AMOCatlas conversion & compliance checker

The purpose of this notebook is to demonstrate the OceanSites format(s) from AMOCatlas.

The demo is organised to show

Step 1: Loading and plotting a sample dataset

Step 2: Converting one dataset to a standard format

Note that when you submit a pull request, you should clear all outputs from your python notebook for a cleaner merge.

[1]:

import pathlib

import sys

script_dir = pathlib.Path().parent.absolute()

parent_dir = script_dir.parents[0]

sys.path.append(str(parent_dir))

import importlib

import xarray as xr

import os

from amocatlas import readers, plotters, standardise, utilities

[2]:

# Specify the path for writing datafiles

data_path = os.path.join(parent_dir, "data")

Load RAPID 26°N

[3]:

# Load data from data/moc_transports (Quick start)

ds_rapid = readers.load_sample_dataset()

ds_rapid = standardise.standardise_rapid(ds_rapid, ds_rapid.attrs["source_file"])

# Load data from data/moc_transports (Full dataset)

datasetsRAPID = readers.load_dataset("rapid", transport_only=True)

standardRAPID = [

standardise.standardise_rapid(ds, ds.attrs["source_file"]) for ds in datasetsRAPID

]

Summary for array 'rapid':

Total datasets loaded: 1

Dataset 1:

Source file: moc_transports.nc

Dimensions:

- time: 14599

Variables:

- t_therm10: shape (14599,)

- t_aiw10: shape (14599,)

- t_ud10: shape (14599,)

- t_ld10: shape (14599,)

- t_bw10: shape (14599,)

- t_gs10: shape (14599,)

- t_ek10: shape (14599,)

- t_umo10: shape (14599,)

- moc_mar_hc10: shape (14599,)

Summary for array 'rapid':

Total datasets loaded: 1

Dataset 1:

Source file: moc_transports.nc

Dimensions:

- time: 14599

Variables:

- t_therm10: shape (14599,)

- t_aiw10: shape (14599,)

- t_ud10: shape (14599,)

- t_ld10: shape (14599,)

- t_bw10: shape (14599,)

- t_gs10: shape (14599,)

- t_ek10: shape (14599,)

- t_umo10: shape (14599,)

- moc_mar_hc10: shape (14599,)

/home/runner/micromamba/envs/amocatlas/lib/python3.14/site-packages/xarray/backends/plugins.py:109: RuntimeWarning: Engine 'gmt' loading failed:

Error loading GMT shared library at 'libgmt.so'.

libgmt.so: cannot open shared object file: No such file or directory

external_backend_entrypoints = backends_dict_from_pkg(entrypoints_unique)

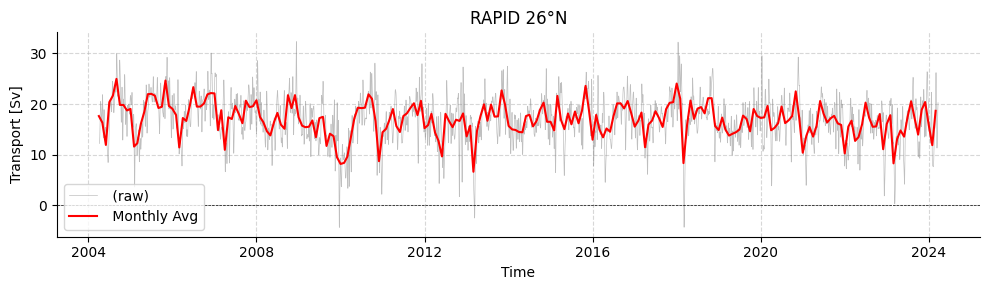

[4]:

# Plot RAPID timeseries

plotters.plot_amoc_timeseries(

data=[standardRAPID[0]],

varnames=["moc_mar_hc10"],

labels=[""],

resample_monthly=True,

plot_raw=True,

title="RAPID 26°N"

)

[4]:

(<Figure size 1000x300 with 1 Axes>,

<Axes: title={'center': 'RAPID 26°N'}, xlabel='Time', ylabel='Transport [Sv]'>)

Step 2: Convert to AC1 Format

The next step is to convert the standardised dataset to AC1 format, which follows OceanSITES conventions.

Note: This conversion currently fails because the standardise.py step doesn’t add proper units to the TIME coordinate. This demonstrates the architectural principle that convert.py validates rather than assigns units.

[5]:

from amocatlas import convert, writers, compliance_checker

# Attempt to convert standardised data to AC1 format

print("🔄 Attempting to convert RAPID data to AC1 format...")

try:

ac1_datasets = convert.to_AC1(standardRAPID[0])

ac1_ds = ac1_datasets[0]

print("✅ Conversion successful!")

print(f" Suggested filename: {ac1_ds.attrs['suggested_filename']}")

print(f" Dimensions: {dict(ac1_ds.dims)}")

print(f" Variables: {list(ac1_ds.data_vars.keys())}")

# Save the dataset

output_file = os.path.join(data_path, ac1_ds.attrs['suggested_filename'])

success = writers.save_dataset(ac1_ds, output_file)

if success:

print(f"💾 Saved AC1 file: {output_file}")

# Run compliance check

print("\\n🔍 Running compliance check...")

result = compliance_checker.validate_ac1_file(output_file)

print(f"Status: {'✅ PASS' if result.passed else '❌ FAIL'}")

print(f"Errors: {len(result.errors)}")

print(f"Warnings: {len(result.warnings)}")

if result.errors:

print("\\nFirst few errors:")

for i, error in enumerate(result.errors[:3], 1):

print(f" {i}. {error}")

except Exception as e:

print(f"❌ Conversion failed: {e}")

print("\\nThis is expected because standardise.py needs to be updated to provide proper units.")

print("The convert.py module validates that units are present rather than assigning them.")

🔄 Attempting to convert RAPID data to AC1 format...

✅ Conversion successful!

❌ Conversion failed: 'suggested_filename'

\nThis is expected because standardise.py needs to be updated to provide proper units.

The convert.py module validates that units are present rather than assigning them.

[6]:

plotters.show_attributes(ac1_ds)

information is based on xarray Dataset

[6]:

| Attribute | Value | DType | |

|---|---|---|---|

| 0 | Conventions | CF-1.8, OceanSITES-1.4, ACDD-1.3 | str |

| 1 | format_version | 1.4 | str |

| 2 | data_type | OceanSITES time-series data | str |

| 3 | featureType | timeSeries | str |

| 4 | data_mode | D | str |

| 5 | title | RAPID Atlantic Meridional Overturning Circulat... | str |

| 6 | summary | Component transport time series from the RAPID... | str |

| 7 | source | RAPID moored array observations | str |

| 8 | site_code | RAPID | str |

| 9 | array | RAPID | str |

| 10 | geospatial_lat_min | 26.5 | float |

| 11 | geospatial_lat_max | 26.5 | float |

| 12 | geospatial_lon_min | -79.0 | float |

| 13 | geospatial_lon_max | -13.0 | float |

| 14 | platform_code | RAPID26N | str |

| 15 | time_coverage_start | 20040402T000000 | str |

| 16 | time_coverage_end | 20240327T235959 | str |

| 17 | contributor_name | Ben Moat, Ben Moat | str |

| 18 | contributor_email | ben.moat@noc.ac.uk, ben.moat@noc.ac.uk | str |

| 19 | contributor_id | https://orcid.org/0000-0001-8676-7779, https:/... | str |

| 20 | contributor_role | creator, PI | str |

| 21 | contributing_institutions | National Oceanography Centre (Southampton) (UK) | str |

| 22 | contributing_institutions_vocabulary | https://edmo.seadatanet.org/report/17 | str |

| 23 | contributing_institutions_role | str | |

| 24 | contributing_institutions_role_vocabulary | str | |

| 25 | contributor_role_vocabulary | https://vocab.nerc.ac.uk/collection/W08/current/ | str |

| 26 | source_acknowledgement | Data from the RAPID AMOC observing project is ... | str |

| 27 | license | CC-BY 4.0 | str |

| 28 | doi | doi: 10.5285/3f24651e-2d44-dee3-e063-7086abc0395e | str |

| 29 | date_created | 20251216T150348 | str |

| 30 | processing_level | Data verified against model or other contextua... | str |

| 31 | comment | Converted to AC1 format from moc_transports.nc... | str |

| 32 | naming_authority | AMOCatlas | str |

| 33 | id | OS_RAPID_20040402-20240327_DPR_transports_T12H | str |

| 34 | cdm_data_type | TimeSeries | str |

| 35 | QC_indicator | excellent | str |

| 36 | institution | AMOCatlas Community | str |

Demonstration: Working conversion with manual units fix

To demonstrate what a successful conversion would look like, let’s temporarily fix the TIME units and run the complete workflow:

[7]:

# Temporarily fix the TIME units to demonstrate successful conversion

# (This would normally be done in standardise.py)

demo_ds = standardRAPID[0].copy()

demo_ds['TIME'].attrs['units'] = 'seconds since 1970-01-01T00:00:00Z'

print("🔄 Converting RAPID data to AC1 format (with TIME units fixed)...")

try:

ac1_datasets = convert.to_AC1(demo_ds)

ac1_ds = ac1_datasets[0]

print("✅ Conversion successful!")

print(f" Suggested filename: {ac1_ds.attrs['id']}.nc")

print(f" Dimensions: {dict(ac1_ds.sizes)}")

print(f" Variables: {list(ac1_ds.data_vars.keys())}")

print(f" TIME units: {ac1_ds.TIME.attrs.get('units')}")

print(f" TRANSPORT units: {ac1_ds.TRANSPORT.attrs.get('units')}")

# Inspect the structure

print("\\n📊 Dataset structure:")

print(f" TRANSPORT shape: {ac1_ds.TRANSPORT.shape}")

print(f" Component names: {list(ac1_ds.TRANSPORT_NAME.values)}")

print(f" Global attributes: {len(ac1_ds.attrs)} attributes")

# Save the dataset using the writers module

output_file = os.path.join(data_path, ac1_ds.attrs['id'] + ".nc")

print(f"\\n💾 Saving to: {output_file}")

success = writers.save_dataset(ac1_ds, output_file)

if success:

print(f"✅ Successfully saved AC1 file!")

# File size check

file_size = os.path.getsize(output_file)

print(f" File size: {file_size:,} bytes")

else:

print("❌ Failed to save file")

except Exception as e:

print(f"❌ Conversion failed: {e}")

import traceback

traceback.print_exc()

🔄 Converting RAPID data to AC1 format (with TIME units fixed)...

✅ Conversion successful!

Suggested filename: OS_RAPID_20040402-20240327_DPR_transports_T12H.nc

Dimensions: {'TIME': 14599, 'LATITUDE': 1, 'N_COMPONENT': 8}

Variables: ['TRANSPORT', 'MOC_TRANSPORT', 'TRANSPORT_NAME', 'TRANSPORT_DESCRIPTION']

TIME units: seconds since 1970-01-01T00:00:00Z

TRANSPORT units: sverdrup

\n📊 Dataset structure:

TRANSPORT shape: (8, 14599)

Component names: [np.str_('Florida Straits'), np.str_('Ekman'), np.str_('Upper Mid-Ocean'), np.str_('Thermocline'), np.str_('Intermediate Water'), np.str_('Upper NADW'), np.str_('Lower NADW'), np.str_('AABW')]

Global attributes: 37 attributes

\n💾 Saving to: /home/runner/work/AMOCatlas/AMOCatlas/data/OS_RAPID_20040402-20240327_DPR_transports_T12H.nc

✅ Successfully saved AC1 file!

File size: 937,465 bytes

Step 3: Compliance Checking

Run the AC1 compliance checker to validate the converted file against the specification:

[8]:

# Run compliance check on the created file

if 'output_file' in locals() and os.path.exists(output_file):

print("🔍 Running AC1 compliance check...")

result = compliance_checker.validate_ac1_file(output_file)

print(f"\\n📊 Compliance Results:")

print(f" Status: {'✅ PASS' if result.passed else '❌ FAIL'}")

print(f" File Type: {result.file_type}")

print(f" Errors: {len(result.errors)}")

print(f" Warnings: {len(result.warnings)}")

if result.errors:

print(f"\\n❌ Errors ({len(result.errors)} total):")

for i, error in enumerate(result.errors[:5], 1):

print(f" {i}. {error}")

if len(result.errors) > 5:

print(f" ... and {len(result.errors) - 5} more errors")

if result.warnings:

print(f"\\n⚠️ Warnings ({len(result.warnings)} total):")

for i, warning in enumerate(result.warnings[:3], 1):

print(f" {i}. {warning}")

if len(result.warnings) > 3:

print(f" ... and {len(result.warnings) - 3} more warnings")

# Show validation categories

print(f"\\n🔧 What the compliance checker validates:")

print(" ✓ Filename pattern (OceanSITES conventions)")

print(" ✓ Required dimensions and variables")

print(" ✓ Variable attributes (units, standard_name, vocabulary)")

print(" ✓ Global attributes (conventions, metadata)")

print(" ✓ Data value ranges (coordinates, valid_min/max)")

print(" ✓ CF convention compliance (dimension ordering)")

else:

print("❌ No AC1 file available for compliance checking")

print("Please ensure the conversion step above succeeded first.")

🔍 Running AC1 compliance check...

\n📊 Compliance Results:

Status: ✅ PASS

File Type: component_transports

Errors: 0

Warnings: 0

\n🔧 What the compliance checker validates:

✓ Filename pattern (OceanSITES conventions)

✓ Required dimensions and variables

✓ Variable attributes (units, standard_name, vocabulary)

✓ Global attributes (conventions, metadata)

✓ Data value ranges (coordinates, valid_min/max)

✓ CF convention compliance (dimension ordering)